Hacking: attacking a PLC with Stuxnet

To illustrate how a PLC can be attacked and what the consequences might be, let’s look at the Stuxnet worm.

Introduction to Stuxnet

Stuxnet is a self-propagating worm aimed specifically at Siemens Supervisory Control and Data Acquisition (SCADA) systems. It was designed to attack specific Siemens PLCs and exploited four 0-day vulnerabilities. The final version of Stuxnet was first discovered in June 2010 by Sergey Ulasen, who at the time worked for the Belarusian antivirus company VirusBlokAda and later joined Kaspersky Lab. An earlier version was already in circulation in 2009, and development may have started as early as 2005. Stuxnet was primarily designed to damage the uranium enrichment facility at Natanz, Iran. However, it spread to over 115 countries, which shows that even a targeted attack can spread and cause damage well beyond its primary target.

The worm was specifically designed to change the rotor speed of the centrifuges inside the Natanz facility, causing them to fail. What is interesting about Stuxnet is that it was a targeted worm, carefully designed to cause damage only if certain criteria were met, so most of the plants it subsequently spread to should not have suffered any damage. Indeed, Stuxnet would only change the rotor speed of centrifuges if the industrial control system architecture matched that of Natanz. Because of its structure and complexity, Stuxnet has been classified as an advanced persistent threat (APT). An APT collects data and executes commands continuously, undetected, over an extended period of time. This is also known as a “low and slow” attack.

How does it work?

The Stuxnet worm was brought into the Natanz facility on a USB flash drive, allowing it to attack the system from the inside. This was considered one of the prerequisites for the attack, because the Natanz facility was not reachable from the Internet: it was separated from outside networks by an air gap.

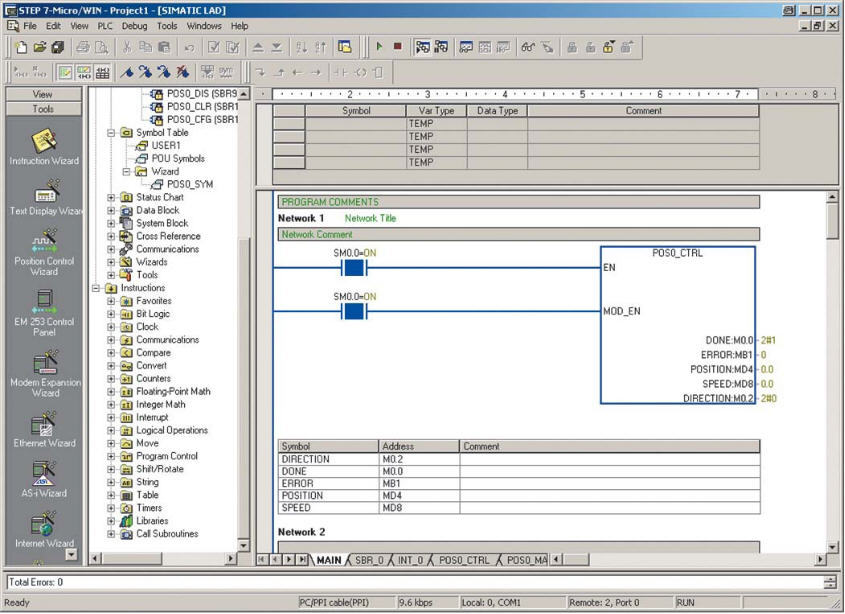

After execution, the worm spread across the network until it found a Windows computer running STEP 7.

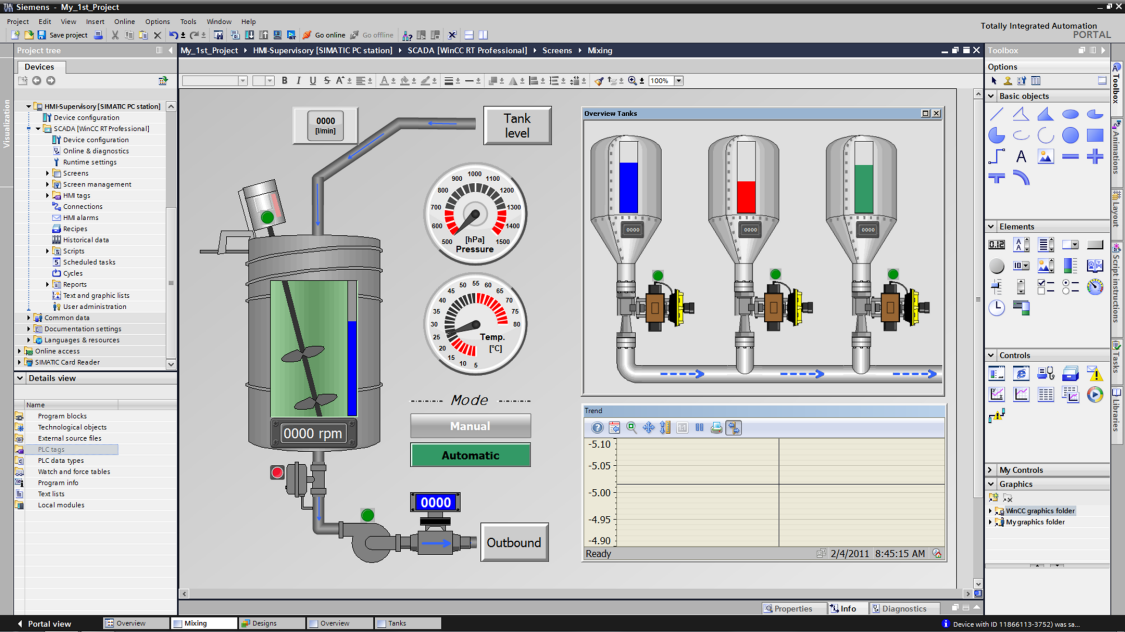

STEP 7 is Siemens’ programming software for its PLCs. The computer running STEP 7 is known as the controlling computer; it interacts directly with the PLC and sends commands to it. Once it reached the STEP 7 controlling computer, Stuxnet manipulated the code blocks sent from that computer to the PLC, executed dangerous commands on the PLC, and made the centrifuges spin at a higher frequency than originally programmed.

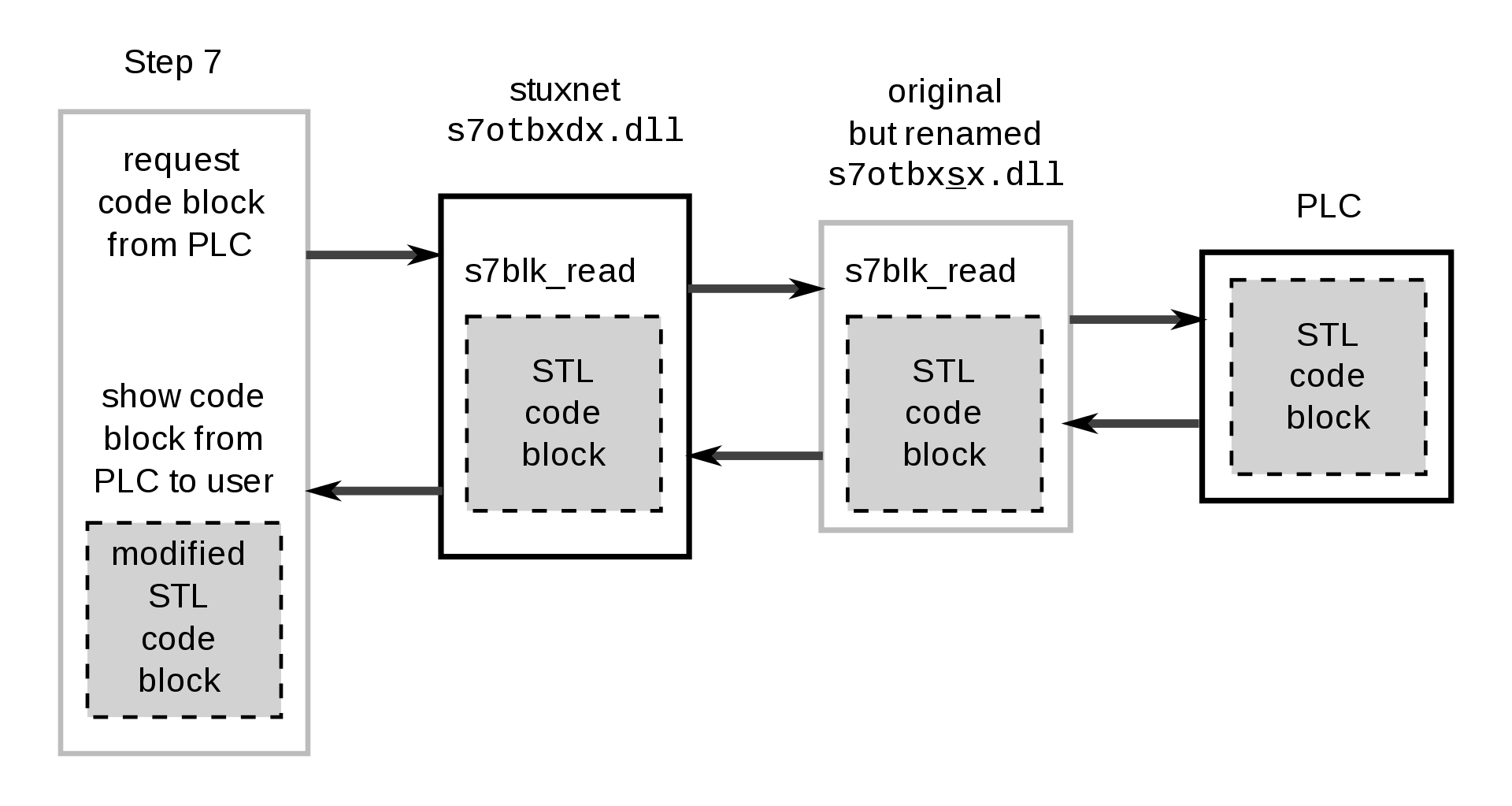

{% hint style="info" %} The STEP 7 software uses a library called s7otbxdx.dll to communicate with the PLC. For example, when a block of code is to be read from the PLC, the “s7blk_read” routine is called. Stuxnet uses a DLL hijacking technique to intercept all commands coming from STEP 7 and WinCC (Siemens’ SCADA system): it modifies their contents and then forwards them to the original library. In this way it sends unexpected commands to the PLC while returning a loop of normal operating values to the users. {% endhint %}
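The interception idea described in the hint can be pictured with a small in-memory simulation. This is only a conceptual sketch, not Stuxnet’s code and not the Siemens API: the class names `PlcLink` and `InterposedLink`, the method names, and all frequency values are hypothetical and chosen purely for illustration. It shows how a component that exposes the same interface as the real library can alter what is forwarded while showing the caller looped, previously recorded readings.

```python
from itertools import cycle


class PlcLink:
    """Hypothetical stand-in for the genuine library that talks to the PLC."""

    def __init__(self, frequency_hz):
        self.frequency_hz = frequency_hz

    def write_frequency(self, value_hz):
        self.frequency_hz = value_hz

    def read_frequency(self):
        return self.frequency_hz


class InterposedLink:
    """Same interface as PlcLink, but sits between the caller and the real link."""

    def __init__(self, real, recorded_readings):
        self._real = real
        # Endlessly repeat readings captured during normal operation.
        self._replay = cycle(recorded_readings)

    def write_frequency(self, value_hz):
        # The request is altered before being forwarded (illustrative offset).
        self._real.write_frequency(value_hz + 400)

    def read_frequency(self):
        # The caller never sees the real state, only the recorded loop.
        return next(self._replay)


real = PlcLink(frequency_hz=1000)
link = InterposedLink(real, recorded_readings=[1000, 1001, 999])

link.write_frequency(1000)
shown = [link.read_frequency() for _ in range(4)]

print("operator sees:", shown)
print("device is at: ", real.read_frequency())
```

The gap between the two printed lines is the point: as long as every reading passes through the compromised path, the operator has no way to notice the difference, which is why an independent, out-of-band measurement is valuable.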

Attacks on the PLC were performed approximately every 27 days to keep the attack stealthy and hard to detect, which is central to an APT. Stuxnet also took over the controlling computer and displayed fake output in the STEP 7 software. This “deception” step was a key part of the attack. Engineers at the facility received no indication of errors and assumed the centrifuges were spinning at the correct frequency. Because STEP 7 showed false output, they attributed the failures to human error rather than malware. Stuxnet also hid its code directly on the PLC after infection, which is why it is also described as a PLC rootkit.

To spread across the network, Stuxnet also exploited vulnerabilities in the Windows operating system. One of them is documented as CVE-2008-4250 in the National Vulnerability Database: a flaw in the Windows Server service, reachable over the Server Message Block (SMB) file sharing protocol, which had been publicly known since 2008 and was therefore not one of the 0-days. The vulnerability allowed remote code execution, which let the worm spread aggressively on the local network. The worm had several other features: it replicated itself, updated itself through a command and control server, contained a Windows rootkit that hid its binaries, and attempted to bypass computer security systems (anti-virus and anti-malware).

The aftermath of the attack

Stuxnet is often described as the world’s first digital weapon; it destroyed about 1,000 centrifuges inside the Natanz facility. Cyberattacks that cause physical damage have changed the way cybersecurity experts perform threat analysis, as well as the way manufacturers design PLCs.

If there is a positive side, it is that Stuxnet swept away the belief that industrial control systems were inviolable, secure because they were isolated and because they were so different from the traditional devices of the IT world.

But the greatest consequence is that, for the first time, government bodies created a cyberweapon capable of causing damage to people and property. Stuxnet is widely attributed to the United States together with Israel, although neither government has officially confirmed it. Nothing of this kind and magnitude had been seen before: until then, hackers and malware had aimed at extorting money, stealing information, or pursuing political goals, but none had crossed the threshold of tangible physical damage.

Hacking a PLC

Part of Stuxnet’s approach was to turn the target PLCs themselves into an attack tool, using a PLC rootkit and manipulating the communication between the controlling computer (SCADA) and the PLC. By targeting both, Stuxnet achieved its goal while fooling the operators, which gave it enough time to destroy the centrifuges. Like any APT, Stuxnet is a sophisticated attack that requires significant information gathering and resources to execute. It also requires in-depth knowledge of the proprietary communication protocols in use and of the architecture of the target PLCs, especially to implement the rootkit. Its code is now publicly available and can be studied extensively.

An industrial control system (ICS) and its PLCs make use of multiple communication protocols. Among the most widely used are Profinet, Profibus and Modbus. Most of them were originally designed without built-in security measures: the lack of authentication and encryption makes packet sniffing, replay attacks and, in some cases, remote code execution possible.

Profinet uses traditional Ethernet hardware, making it compatible with most equipment. Profinet is widely used in the automation industry and its design is based on the Open Systems Interconnection (OSI) model. Profinet allows bi-directional communication and is the preferred communication protocol for Siemens Simatic PLCs.

Profibus is an international communication standard for fieldbuses. It is used to connect multiple devices together and allows for two-way communication. There are two types of Profibus: Profibus Decentralized Peripherals (DP) and Profibus Process Automation (PA). One limitation with Profibus is that it is only able to communicate with one device at a time. The new version of Profibus is standardized in IEC 61158.

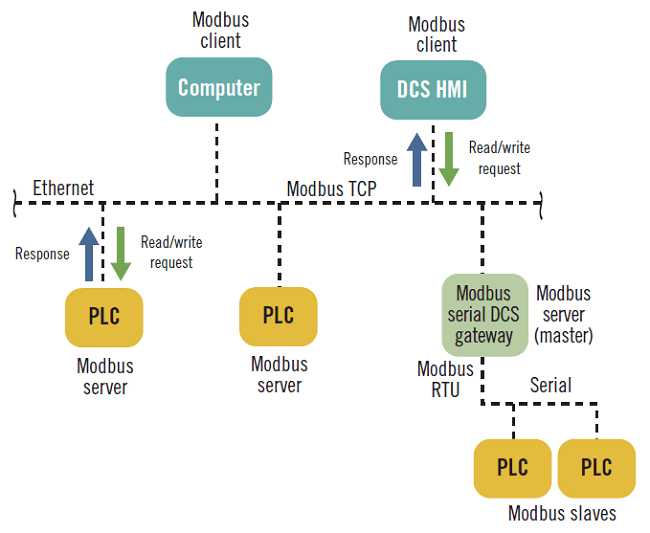

Modbus is a serial communication protocol designed and published by Modicon (now Schneider Electric) in 1979. Modbus uses master/slave communication and supports up to 247 devices. The control computer (HMI or SCADA) normally acts as master, while the automation devices, or PLCs, are slaves. It was originally designed as a communication protocol for PLCs and later became an international standard for connecting multiple industrial devices. Modbus is easy to implement, inexpensive, and widely accepted as a communications standard. There are at least three variants of the Modbus protocol: American Standard Code for Information Interchange (ASCII), Remote Terminal Unit (RTU), and TCP/IP.
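To make the simplicity of Modbus concrete, here is a minimal sketch that builds a Modbus TCP “Read Holding Registers” request (function code 0x03) by hand. The function name `build_read_holding_registers` and the sample values are assumptions made for this illustration; the frame layout follows the public Modbus specification. Nothing is sent over the network.

```python
import struct


def build_read_holding_registers(transaction_id, unit_id, start_address, quantity):
    """Return the raw bytes of a Modbus TCP 'Read Holding Registers' request."""
    if not 1 <= quantity <= 125:
        raise ValueError("quantity must be between 1 and 125 registers")
    # PDU: function code (1 byte), start address (2 bytes), quantity (2 bytes).
    pdu = struct.pack(">BHH", 0x03, start_address, quantity)
    # MBAP header: transaction id, protocol id (always 0), length of what
    # follows (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


frame = build_read_holding_registers(
    transaction_id=1, unit_id=1, start_address=0, quantity=2
)
print(frame.hex())
```

The whole request is 12 bytes, and none of them is a credential, a session token, or a signature: any device that can reach the slave can send the same frame.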

Several Metasploit scanners allow the detection and exploitation of Modbus and Profinet devices. Similar scanners written in Python are also available on GitHub. In 2011 the security researcher Dillon Beresford, then at NSS Labs, launched remote exploits against Siemens’ Simatic PLC series over the S7 communication protocol (ISO-TSAP), which uses TCP port 102.

What is interesting about these exploits is that they can dump and display memory and issue ON/OFF commands to the PLC’s central processing unit (CPU). One example is the exploit called “remote-memory-viewer”, which authenticates using a backdoor password hard-coded in Siemens’ Simatic S7-300 PLC. The CPU start/stop exploit sends shellcode to the PLC and turns its CPU on or off remotely. The same start/stop exploit exists for the S7-1200 series. Furthermore, by injecting shellcode, it is also possible to gain remote access to the PLC.

Because of missing sanity checks, older PLCs execute commands regardless of whether they come from a legitimate source. The reason is that the network packets carry no authentication or cryptographic integrity protection, so the PLC cannot tell a genuine command from a captured copy. Replay attacks have been shown to work against a large number of PLCs, allowing an attacker to issue execution commands remotely. Exploiting PLCs remotely with open source tools therefore poses a major threat to SCADA systems.
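As a contrast to the situation described above, the following sketch shows one common way a protocol can resist replayed or modified commands: each message carries a strictly increasing counter and an HMAC computed with a shared key. This is a generic illustration, not a feature of any specific PLC or protocol; the key, the command strings, and the names `seal` and `Receiver` are hypothetical.

```python
import hashlib
import hmac
import struct

KEY = b"demo-shared-key"  # hypothetical key, for illustration only
TAG_LEN = hashlib.sha256().digest_size  # 32 bytes


def seal(counter, command):
    """Prefix the command with a counter and append an HMAC over both."""
    header = struct.pack(">Q", counter)
    tag = hmac.new(KEY, header + command, hashlib.sha256).digest()
    return header + command + tag


class Receiver:
    """Accepts a message only if its tag is valid and its counter is fresh."""

    def __init__(self):
        self.last_counter = 0

    def accept(self, message):
        if len(message) < 8 + TAG_LEN:
            return False
        header, command, tag = message[:8], message[8:-TAG_LEN], message[-TAG_LEN:]
        expected = hmac.new(KEY, header + command, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return False  # forged or modified in transit
        (counter,) = struct.unpack(">Q", header)
        if counter <= self.last_counter:
            return False  # already seen: a replay
        self.last_counter = counter
        return True


receiver = Receiver()
message = seal(1, b"STOP_CPU")

first = receiver.accept(message)     # genuine, fresh message
replayed = receiver.accept(message)  # identical bytes sent a second time

tampered = bytearray(seal(2, b"START_CPU"))
tampered[8] ^= 0x01                  # flip one bit of the command
tampered_ok = receiver.accept(bytes(tampered))

print(first, replayed, tampered_ok)
```

A receiver without these two checks, which is the case for the older PLCs discussed here, would have accepted all three messages.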

During Black Hat USA 2011, Beresford presented a live demo targeting the Siemens Simatic S7-300 and S7-1200 series. The exploits used in his demo are written in Ruby and are compatible with the Metasploit Framework.

Remote exploits on ICS were an essential part of the Stuxnet worm. Beresford, however, demonstrated that it is possible to gain remote access to a PLC using the hard-coded password built into the software, which in some ways is a step beyond what Stuxnet did.

But does it only happen with Siemens?

To be clear, this is not exclusively a Siemens problem. Rockwell Automation was also affected by a stack buffer overflow that could allow remote access to the system by injecting arbitrary code, according to CVE-2016-0868 in the National Vulnerability Database. The vulnerability was reported on January 26, 2016 and affected the MicroLogix 1100 PLC. Additionally, several other exploits and scanners available in the Metasploit project can be used to remotely execute commands on different PLC models.

The controlling computer can also be used as a hacking tool, mainly through software exploits, some of which give the attacker control of a workstation in a SCADA or ICS system. This allows the attacker to manipulate the data sent to the PLC and to pivot within the network. An exploit created by Exploit Database contributor James Fitts allows a remote attacker to inject arbitrary code into Fatek’s PLC programming software, WinProladder, as documented in CVE-2016-8377 in the National Vulnerability Database.

While the attacker can trigger the exploit remotely, it still requires user interaction, such as visiting a malicious web page or opening an infected file, to succeed. The exploit is a stack buffer overflow, available in Ruby for import into Metasploit. Applications written in C are often more vulnerable to buffer overflows than those written in other programming languages, and many C-based software packages are in use in industrial control systems. Shellcode injected through a buffer overflow vulnerability could, for example, allow remote access to the system or be used for privilege escalation.
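A buffer overflow typically arises when software trusts a length value supplied by the input and copies that many bytes into a fixed-size buffer. The sketch below shows the validation step that such code omits, using a made-up length-prefixed record format. It is written in Python only to keep the idea readable; the function `parse_name_record`, the record layout, and the 64-byte limit are assumptions for this example and are not taken from WinProladder or any real product.

```python
import struct

MAX_NAME_LEN = 64  # hypothetical capacity of the destination buffer


def parse_name_record(data):
    """Parse a record made of a 2-byte big-endian length followed by a name."""
    if len(data) < 2:
        raise ValueError("record too short for its length prefix")
    (declared,) = struct.unpack_from(">H", data, 0)
    # Check 1: the declared length must fit the destination buffer.
    if declared > MAX_NAME_LEN:
        raise ValueError("declared length exceeds buffer capacity")
    # Check 2: the declared length must not exceed the bytes actually received.
    if declared > len(data) - 2:
        raise ValueError("declared length exceeds received data")
    return data[2:2 + declared]


print(parse_name_record(b"\x00\x03abc"))

try:
    # A record claiming 500 bytes, far more than the buffer can hold.
    parse_name_record(struct.pack(">H", 500) + b"A" * 500)
except ValueError as err:
    print("rejected:", err)
```

In C, skipping the first check and copying `declared` bytes into a 64-byte stack array is exactly the kind of mistake that lets an attacker overwrite adjacent memory.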

Are we doomed?

Lack of security in industrial control systems is a major concern. A PLC was originally designed to function only as an automatic controller inside an industrial control system, not to be connected to external components or reachable from the internet. However, the evolution of ICS design has started to expose PLCs to the internet, as searches with tools like Shodan show. PLCs rely on “air-gapped” networks and limited physical access as their security measures.

Air-gapped networks have repeatedly been shown to be a flawed design and are by no means a sufficient security argument in modern ICS. This was demonstrated by the Stuxnet attack, which spread to over 115 countries and infected critical infrastructure around the world even though most control systems were designed to be isolated. This change in ICS environments and critical infrastructure means that PLCs are exposed to a greater security threat than before.

(Thanks to the work of Siv Hilde Houmb and Erik David Martin, on which this article is based)

Important note

This article is intended for educational and informational purposes only. Any unauthorized action against any control system on a public or private network is illegal! The information contained in this and other articles is intended to help people understand how necessary it is to improve defense systems, not to provide tools for attacking them. Violating a computer system is punishable by law and can cause serious damage to property and people, especially when it comes to ICS. All the tests illustrated in the tutorials have been carried out in isolated, safe, or manufacturer-authorized laboratories.

Stay safe, stay free.